Reviewed by Julianne Ngirngir

Samsung's One UI 8 beta has users discovering an unexpected twist in the company's famous moon photography saga. While Samsung officially launched the One UI 8 beta program on May 28, 2025, and testers are exploring refreshed apps and improved multitasking features, some are stumbling into what might be the most entertaining camera bug yet. Here's what makes this particularly fascinating: this glitch perfectly exposes the absurd complexity of modern smartphone photography, where even Samsung's own AI systems seem confused about when to enhance reality versus when to document it.

The moon controversy that won't quit

Samsung's moon photography has been a lightning rod since the Galaxy S21 series. The company's Scene Optimizer uses AI to detect the moon at 25x zoom or higher, then combines multiple frames with a "detail enhancement engine" to create those impossibly crisp lunar shots. This isn't simple sharpening—it's a sophisticated process where AI deep learning models trained on hundreds of moon images actively enhance details that may not exist in your original shot.
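To make that pipeline concrete, here is a minimal sketch of the decision flow described above. It is not Samsung's code. The only detail taken from the reporting is the 25x zoom threshold; the `Frame` type, `merge_frames`, `process_shot`, and the `enhance` callback are hypothetical names, and plain frame averaging stands in for whatever multi-frame processing Samsung actually uses.

```python
from dataclasses import dataclass
from statistics import fmean
from typing import Callable, List, Tuple

# From the article: moon detection kicks in at 25x zoom or higher.
MOON_ZOOM_THRESHOLD = 25.0


@dataclass
class Frame:
    """Hypothetical frame: flattened grayscale brightness values, 0.0 to 1.0."""
    pixels: List[float]


def merge_frames(frames: List[Frame]) -> Frame:
    """Average frames pixel by pixel (an assumed stand-in for multi-frame merging)."""
    return Frame([fmean(values) for values in zip(*(f.pixels for f in frames))])


def process_shot(
    frames: List[Frame],
    zoom: float,
    moon_detected: bool,
    enhance: Callable[[Frame], Frame],
) -> Tuple[Frame, bool]:
    """Return the output frame and whether the moon path ran.

    `enhance` is a placeholder for the "detail enhancement engine";
    its real behavior is not public.
    """
    if zoom < MOON_ZOOM_THRESHOLD or not moon_detected:
        # Ordinary shot: hand back the first captured frame untouched.
        return frames[0], False
    merged = merge_frames(frames)
    return enhance(merged), True
```

The point of the sketch is the branch: once both conditions are met, what you get back is the output of `enhance`, not any single frame the sensor recorded.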

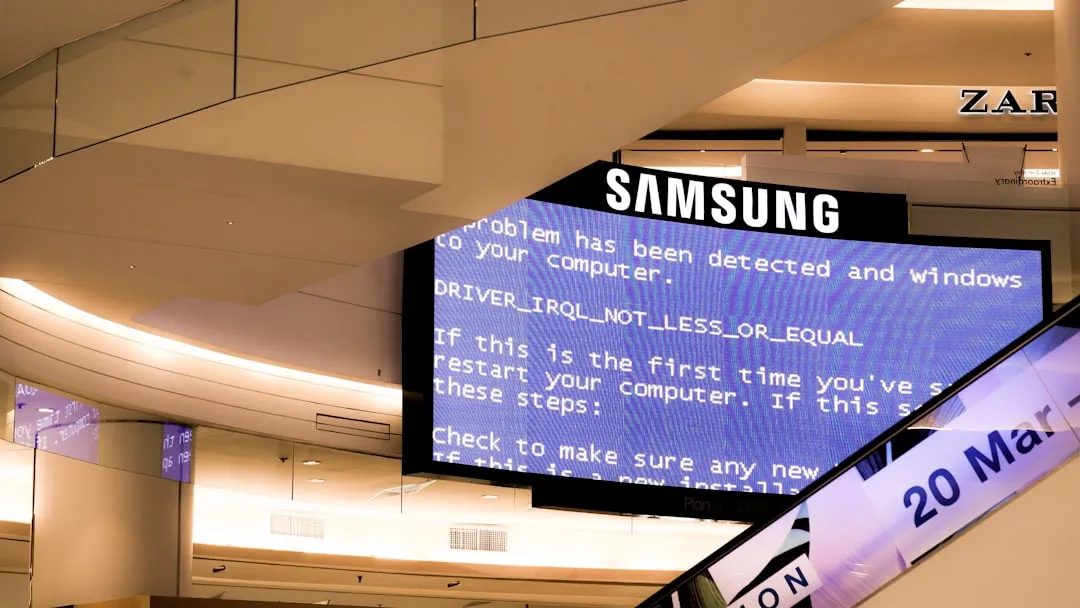

The controversy exploded when Reddit users showed that Samsung's system adds details that weren't in the original image: they photographed blurry moon pictures displayed on computer screens and watched Samsung's AI "enhance" crater details that did not exist. Samsung's response has been telling: the company admits it's working to reduce confusion "between the act of taking a picture of the real moon and an image of the moon," essentially acknowledging that its AI creates moon photos rather than just capturing them.

For Galaxy users, this means understanding what you're actually getting when you tap that shutter button. Your phone isn't just recording what the camera sees. It's running a neural network trained on moon imagery and reconstructing what it thinks the moon should look like based on your blurry input.

What makes this One UI 8 glitch so perfect

The timing couldn't be more ironic. Samsung just spent years building increasingly complex AI photography systems across its Galaxy lineup. The Galaxy S25 series introduced AI camera filters with adjustable strength, color temperature, and contrast—features that automatically disable at higher zoom levels to avoid conflicts with other AI processing. It's a careful dance of multiple AI systems trying not to step on each other's computational toes.

But One UI 8 beta users are discovering that their cameras apparently have philosophical disagreements about this whole enhancement business. While Samsung has been testing internal builds and pushing toward a stable release, some beta testers report their moon detection algorithm triggering in situations that would make even Samsung's engineers chuckle.

What you're witnessing is the collision of Samsung's various AI photography approaches. The Scene Optimizer that powers moon shots operates separately from the newer AI filters, which work only at default resolution. When these systems get confused in beta software, the results reveal just how artificial our "smart" phone photography has become. It's like watching the man behind the curtain accidentally step into the spotlight.

PRO TIP: If you're running One UI 8 beta and experiencing unexpected camera behavior, check Settings > Camera > Scene Optimizer to disable AI enhancement temporarily.

Where do we go from here?

This One UI 8 beta quirk illuminates the broader question facing smartphone photography in 2025: when does computational enhancement become computational creation? Samsung has been honest about using AI to "recognize various moon phases" and apply "detail enhancement," but the line between enhancement and generation continues to blur.

For you as a Galaxy user, here's what matters: Samsung's One UI 8 Beta 4 was released in July with camera stability improvements, though moon-related entertainment apparently wasn't considered a bug requiring fixes. The stable One UI 8 rollout, expected in August, will likely resolve these beta quirks, but the underlying tension between authenticity and AI enhancement remains.

The deeper insight isn't about Samsung's moon algorithm being "fake"—it's about how computational photography has redefined what phone cameras do. Every modern smartphone applies some level of AI processing to your photos, from HDR to portrait mode to noise reduction. Samsung's moon shots are just the most visible example of this transformation from photo capture to photo creation.

Rather than debating authenticity, consider this: Samsung's various AI systems getting confused in beta software is actually the most honest thing about smartphone photography. It reminds us that our "point and shoot" experience involves complex algorithms making split-second decisions about how reality should look. Sometimes those algorithms disagree with each other, and the result is comedy gold that perfectly captures the absurdity of modern mobile photography.

What you should know: Samsung's moon photos may involve AI reconstruction, but they're still more representative of actual lunar surface details than whatever entertainment the One UI 8 beta algorithms are currently providing. The real moon controversy isn't about fake photos—it's about transparent communication around what computational photography actually does to our images.
